Generating Statistics From Web Server Access Logs

This is an intermediate tutorial.

It is expected that you already have some familiarity with Linux, the command line, scripting, etc.

In one of my recent articles, “Life, Blog Stats, and {No} Profit”, I gave a breakdown on the number of visitors to my blog since I first created it in 2018. Today I want to focus on generating some of that data from the web server access logs themselves.

For that post, I tossed together a quick bash one-liner to pull the number of unique addresses per day.

After that, I decided that I wanted to extend this a bit.

- Make it a repeatable process so I don’t have to hunt through my bash history to find the previous command I ran

- Provide additional stats than just the number of unique visitors per day

This of course meant writing a script, so let’s get to it : )


The Log Files

On this server, the web server log files are stored as follows:

- Logs are contained inside their own directories under /var/log/nginx based on their domain name

$ ls /var/log/nginx/

default techbit.ca totalclaireity.com

- Logs are split between “access” logs and “error” logs

$ ls /var/log/nginx/techbit.ca/

access error

- There are three “types” of log file names:

- The “current” access log

- Purpose: Recording the current entries from the running web server

- Filename: access.techbit.ca.log

- The previous day’s access log

- Purpose: At the end of the day, the “current” log file is renamed to have the current date appended to it, then a new “current” log file is started

- Filename: access.techbit.ca.log.[DATE]

- Logs older than 1 day

- Purpose: Logs older than one day are gzip compressed and named accordingly

- Filename: access.techbit.ca.log.[DATE].gz


The log files for “techbit.ca” are stored as follows:

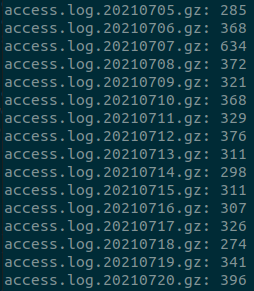

$ ls /var/log/nginx/techbit.ca/access/

access.techbit.ca.log access.techbit.ca.log.20211008.gz

access.techbit.ca.log.20210923.gz access.techbit.ca.log.20211009.gz

access.techbit.ca.log.20210924.gz access.techbit.ca.log.20211010.gz

access.techbit.ca.log.20210925.gz access.techbit.ca.log.20211011.gz

access.techbit.ca.log.20210926.gz access.techbit.ca.log.20211012.gz

access.techbit.ca.log.20210927.gz access.techbit.ca.log.20211013.gz

access.techbit.ca.log.20210928.gz access.techbit.ca.log.20211014.gz

access.techbit.ca.log.20210929.gz access.techbit.ca.log.20211015.gz

access.techbit.ca.log.20210930.gz access.techbit.ca.log.20211016.gz

access.techbit.ca.log.20211001.gz access.techbit.ca.log.20211017.gz

access.techbit.ca.log.20211002.gz access.techbit.ca.log.20211018.gz

access.techbit.ca.log.20211003.gz access.techbit.ca.log.20211019.gz

access.techbit.ca.log.20211004.gz access.techbit.ca.log.20211020.gz

access.techbit.ca.log.20211005.gz access.techbit.ca.log.20211021.gz

access.techbit.ca.log.20211006.gz access.techbit.ca.log.20211022.gz

access.techbit.ca.log.20211007.gz access.techbit.ca.log.20211023

For the purpose of the script, we are only going to compute the stats from the gzip files.

Defining Our Expectations

The first step in any good script writing exercise is to define what we want the script to do. It’s a blueprint that we can then work through when we build the script.

I wanted my script to do the following:

- Use as many variables as possible so that if I have to update something in the future I can update it in a single place versus multiple places throughout the script.

- Be able to provide statistics for both domains hosted on this server: “techbit.ca” and “totalclaireity.com”

- It must remove as many erroneous log entries as possible before generating the statistics. Erroneous entries are things such as traffic from bots, automated web crawlers, exploit attempts, etc.

- Both of these websites are “static” content. So I’m only concerned with “GET” requests

- Must output the following:

- The name of the domain

- The number of days (equivalent to the number of log files)

- The total number of unique visits

- The lowest number of daily visits

- The highest number of daily visits

- The average number of daily visits

Writing the Script

1. The Variables

The variables in this script consist of both “fixed” and “fluid” variables. The fixed variables are used to define the criteria of our environment and how we’re going to search, while the fluid variables are used to store “temporary” values, such as when iterating through a “for” loop.

#!/bin/bash

DIR_LOGS="/var/log/nginx"

LOG_TYPE="access"

ARRAY_DOMAINS=("techbit.ca" "totalclaireity.com")

GREP_EXCLUDE="bot|php|login|solr|_ignition|wp-content|\.env|\.well-known|autodiscover|ecp|cgi|api|actuator|hudson|reportserver|getuser|\/console|wlc6|ftpsync\.settings|ab2g|ab2h|aspx|wp-admin|aaa9|\.git\/config|fgt_lang|server-status|\.json"

numDomains=${#ARRAY_DOMAINS[@]}

Breakdown:

- DIR_LOGS

- The base directory where the web server logs are stored

- LOG_TYPE

- The “type” of log we want to search. In this case we’re going to look at the “access” log

- ARRAY_DOMAINS

- Stores the name of the domains to be used in the script in an array. This makes it a little bit easier/cleaner to use the same code to generate the stats for both domains

- GREP_EXCLUDE

- Defines what should be “excluded” from the logs before the stats are generated. I want to see stats for “actual people”, and not bots, crawlers, exploit attempts, etc. Each “term” is separated by a “|” (meaning “or”). A “.” must be escaped with a “\” so that it matches a literal dot rather than any character. The “/” is escaped here as well, although grep does not require it

- numDomains

- Stores the length of the ARRAY_DOMAINS array
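To see why escaping the “.” matters, here is a small sketch using two made-up request lines (they are not taken from my logs). An unescaped “.” matches any character, so “.env” also matches the “/env” at the start of an ordinary article URL, while “\.env” only matches a literal dot.

```bash
#!/bin/bash
# Hypothetical request lines for illustration only
paths=$'GET /.env HTTP/1.1\nGET /2021/05/environment-variables/ HTTP/1.1'

# Unescaped: "." matches any character, so both lines are excluded
kept_unescaped=$(printf '%s\n' "$paths" | grep -c -i -v -E '.env')

# Escaped: only the literal "/.env" probe is excluded
kept_escaped=$(printf '%s\n' "$paths" | grep -c -i -v -E '\.env')

echo "Lines kept with '.env':  $kept_unescaped"
echo "Lines kept with '\.env': $kept_escaped"
```

With the unescaped pattern a legitimate page view would silently disappear from the stats, which is the kind of over-filtering the escapes are there to prevent.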

2. Multiple Domains

We have more than one domain, and they’re stored in an array. So let’s use a “for” loop to access each element in the array so that an action can be performed.

If you need a primer on “for” loops, see my post Explaining For Loops Using Bash.

# Use a for loop to iterate through the domains in the array

for (( i=0; i<$numDomains; i++ ))

do

# Code to do something

done

This is the “outer” for loop, which will run twice (because there are two elements in the array).

# Use a for loop to iterate through the domains in the array

for (( i=0; i<$numDomains; i++ ))

do

# Current run number (keep in mind that arrays begin at position zero)

echo "Current pass through the loop: $i"

# Display the current array element

echo "Current element: ${ARRAY_DOMAINS[$i]}"

done

Output

$ bash explain-get-web-stats.sh

Current pass through the loop: 0

Current element: techbit.ca

Current pass through the loop: 1

Current element: totalclaireity.com

3. Pulling the Data

First, let’s create some variables in the outer loop to store temporary values. These variables will get reset every time the outer loop runs.

# Use a for loop to iterate through the domains in the array

for (( i=0; i<$numDomains; i++ ))

do

# Zero out temp variables at start of loop

len=0

sum=0

low=0

high=0

avg=0

numIP=()

# Build the file path

file="$DIR_LOGS/${ARRAY_DOMAINS[$i]}/$LOG_TYPE/$LOG_TYPE.${ARRAY_DOMAINS[$i]}*.gz"

# Echo current status

echo "Calculating stats for ${ARRAY_DOMAINS[$i]}..."

echo ""

done

Breakdown:

- len=0

- Used to store the “length” or number of elements in an array

- sum=0

- Used to store the total sum of unique IPs

- low=0

- Used to store the lowest “count” of IPs. This will be the lowest number of unique visitors during a one day period

- high=0

- Used to store the highest “count” of IPs. This will be the highest number of unique visitors during a one day period

- avg=0

- Used to store the average number of visits per day

- numIP=()

- Array used to store the “count” of IPs per day. Storing these counts in an array makes it much easier to pull the high, low, sum etc.

- file=

- We’re building the file path we want to “search” from the previously defined variables

- ex: During the first pass through of the outer loop, the file variable will be built from

- $DIR_LOGS/${ARRAY_DOMAINS[$i]}/$LOG_TYPE/$LOG_TYPE.${ARRAY_DOMAINS[$i]}*.gz

- Which will expand to:

- “/var/log/nginx/techbit.ca/access/access.techbit.ca*.gz”
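One detail worth spelling out: the “*” is not expanded when the file variable is assigned, because the value is quoted. It is only expanded later, when $file is used without quotes. A minimal sketch of that behaviour, using a throwaway directory and made-up file names rather than real logs (it assumes mktemp is available and that the temporary path contains no spaces):

```bash
#!/bin/bash
# Throwaway directory with made-up log names
tmp=$(mktemp -d)
touch "$tmp/access.example.log" \
      "$tmp/access.example.log.20211022.gz" \
      "$tmp/access.example.log.20211023"

# Quoted assignment: the pattern is stored as-is, not expanded
file="$tmp/access.example*.gz"

# Unquoted use: bash expands the pattern into the matching file names
matched=()
for f in $file
do
    matched+=("$(basename "$f")")
done

echo "Pattern matched ${#matched[@]} file(s): ${matched[*]}"
rm -rf "$tmp"
```

Only the compressed log matches. Note that if nothing matches at all, bash leaves the pattern unexpanded by default, so the loop would run once with the literal pattern as the “file name”.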

Before we can go pulling data from our logs, we need to determine how to parse the log data.

Here’s a snippet of what my access log looks like:

- Note: IPs have been truncated for this example.

3.142.x.x - - [26/Oct/2021:08:03:42 +0000] "GET /2021/07/does-the-turbo-make-the-mazda3-sport-sporty-again/ HTTP/1.1" 200 7902 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko; compatible; BW/1.1; bit.ly/2W6Px8S) Chrome/84.0.4147.105 Safari/537.36"

66.249.x.x - - [26/Oct/2021:08:49:57 +0000] "GET /2021/07/does-the-turbo-make-the-mazda3-sport-sporty-again/ HTTP/1.1" 200 7902 "-" "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

40.77.x.x - - [26/Oct/2021:09:03:22 +0000] "GET /2021/10/freenas-mini-os-drive-upgrade HTTP/1.1" 301 162 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"

40.77.x.x - - [26/Oct/2021:09:03:23 +0000] "GET /2021/10/freenas-mini-os-drive-upgrade/ HTTP/1.1" 200 8085 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"

5.249.x.x - - [26/Oct/2021:09:26:20 +0000] "GET /2021/01/fixing-broken-symlinks-with-find-and-replace/ HTTP/1.1" 200 12259 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36"

The log snippet above contains five entries. Two of them are from unique visitors and the other three are from “bots” (Google and Bing) that are indexing my site so that my pages can be found in search results.

The only traffic I care about for generating the stats is the unique visitors, so to get that data I need to filter out as much of the erroneous data as I can.

GREP_EXCLUDE="bot|php|login|solr|_ignition|wp-content|\.env|\.well-known|autodiscover|ecp|cgi|api|actuator|hudson|reportserver|getuser|\/console|wlc6|ftpsync\.settings|ab2g|ab2h|aspx|wp-admin|aaa9|\.git\/config|fgt_lang|server-status|\.json"

I also only care about “GET” type requests, and only want to extract the IP address from the log so I can count it as a visitor.
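As a rough sketch of that filtering, here are four made-up log lines (the IPs come from the reserved documentation ranges and the user agents are shortened) run through a much shorter exclude list. The variable names sample_log, exclude and visitor_ips exist only for this example.

```bash
#!/bin/bash
# Made-up log lines: one bot, one POST, and two GETs from the same visitor
sample_log='192.0.2.10 - - [26/Oct/2021:08:03:42 +0000] "GET /post-a/ HTTP/1.1" 200 7902 "-" "Mozilla/5.0"
198.51.100.7 - - [26/Oct/2021:08:49:57 +0000] "GET /post-a/ HTTP/1.1" 200 7902 "-" "Googlebot/2.1"
203.0.113.5 - - [26/Oct/2021:09:03:22 +0000] "POST /contact HTTP/1.1" 405 162 "-" "Mozilla/5.0"
192.0.2.10 - - [26/Oct/2021:09:26:20 +0000] "GET /post-b/ HTTP/1.1" 200 12259 "-" "Mozilla/5.0"'

# Shortened exclude list for the example (the real one is much longer)
exclude="bot|php|wp-admin"

# Drop excluded lines, keep GET requests, then keep only the IP column
visitor_ips=$(printf '%s\n' "$sample_log" | grep -i -v -E "$exclude" | grep "GET" | cut -d ' ' -f 1)

echo "$visitor_ips"
```

The bot line and the POST line are dropped, leaving the same address twice. Removing that duplicate is the remaining step before counting.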

Since there are multiple log files to search through, we’re going to need another for loop “nested” inside our first one.

# Use a for loop to iterate through the domains in the array

for (( i=0; i<$numDomains; i++ ))

do

...

# Use a for loop to count the unique IPs for each daily log and store the results in an array

for f in $file

do

numIP=$(zgrep -i -v -E "$GREP_EXCLUDE" $f | grep "GET" | cut -d ' ' -f 1 | awk '!a[$0]++' | wc -l)

numIP+=($numIP)

done

done

Breakdown:

- for f in $file

- For every file that matches the criteria, perform the actions of the “for” loop

- numIP=$()

- Store the results of the command in the array for further processing

- zgrep -i -v -E

- Use zgrep because the search is being performed on gzip compressed files

- -i: Case insensitive search

- -v: Return everything but the found results

- -E: Use extended grep patterns for the search

- | grep “GET”

- Pipe the output of the first grep to another grep, looking only for “GET” requests

- | cut -d ' ' -f 1

- Pipe the output to the cut command, use space as a delimiter, and pull field “1”

- | awk '!a[$0]++'

- Pipe the output to the awk command to delete any duplicates (thereby leaving only one entry per IP)
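The de-duplication step can be tried on its own with a made-up list of addresses. The sketch below also runs the same list through sort -u, a common alternative I am adding only for comparison, to show that both give the same count.

```bash
#!/bin/bash
# Made-up list of IPs, one per request, with repeats
ips=$'192.0.2.10\n198.51.100.7\n192.0.2.10\n203.0.113.5\n198.51.100.7'

# awk prints a line only the first time it is seen
count_awk=$(printf '%s\n' "$ips" | awk '!a[$0]++' | wc -l)

# sort -u is another common way to get the same count
count_sort=$(printf '%s\n' "$ips" | sort -u | wc -l)

echo "awk: $((count_awk)) unique, sort -u: $((count_sort)) unique"
```

The awk version keeps the addresses in the order they first appeared, while sort -u reorders them. For a plain count that difference does not matter.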

This will produce a count of IPs for each log file, which represents one day. The array for one domain will look like this:

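[254,118,241,253,241,226,246,291,267,220,221,227,219,275,267,281,225,221,311,207,243,266,238,261,270,222,215,239,229,227,255,257,254]

Notice that the count for the final log file appears in the array twice: once at the end of the array (where it should be), but also at position zero (where it should NOT be).

This happens because numIP is used as both a plain variable and an array. In bash, assigning to an array name without an index writes to element zero, so numIP=$(...) overwrites position zero on every pass, and numIP+=($numIP) then appends that same value to the end. After the last pass, position zero still holds the count from the final log file. Storing the count in a separately named variable would avoid this; the rest of this script works around it by skipping position zero.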
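The doubled entry can be reproduced with a few made-up counts. The sketch below uses the same numIP=... followed by numIP+=(...) pattern as the script, then a variant that keeps the per-file count in its own variable (daily_counts, count and counts are names made up for this example).

```bash
#!/bin/bash
# Made-up daily counts standing in for the zgrep pipeline results
daily_counts=(254 118 241)

# Same pattern as the script: one name used as both scalar and array
numIP=()
for c in "${daily_counts[@]}"
do
    numIP=$c          # writes to numIP[0], overwriting it every pass
    numIP+=($numIP)   # $numIP reads numIP[0], then appends it
done
echo "Shared name:   ${numIP[*]}"

# Hypothetical variant: keep the per-file count in its own variable
counts=()
for c in "${daily_counts[@]}"
do
    count=$c
    counts+=("$count")
done
echo "Separate name: ${counts[*]}"
```

With the shared name, position zero ends up holding the last count. If the script were changed to the second form, the later loops would need to start at position zero and divide by the full array length instead of the length minus 1.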

4. Generating the statistics for each log

Everything we need is now contained in the numIP() array. This array is reset at the beginning of every pass through the outer loop, so we have to put our code in the outer loop to calculate the statistics before the data disappears. This will give us the data for each domain.

for (( i=0; i<$numDomains; i++ ))

do

...

# Use a for loop to count the unique IPs for each daily log and store the results in an array

for f in $file

do

...

done

# Get the length of the array containing the number of IPs

len=${#numIP[@]}

# Set the low and high values to the second position in the array

low=${numIP[1]}

high=${numIP[1]}

done

First we need to set some variables again.

Breakdown:

- len=${#numIP[@]}

- This assigns the length (the number of entries) of the array to the variable len. We’ll use this in a for loop to iterate through the array values without going past the end of the array

- low=${numIP[1]} and high=${numIP[1]}

- This assigns the value of the second position to the “low” and “high” variables respectively

- We have to use the second position here numIP[1] in order to skip the final log double entry in numIP[0]

Now we can use another “for” loop to loop through the numIP array to calculate the stats:

for (( i=0; i<$numDomains; i++ ))

do

...

# Use a for loop to count the unique IPs for each daily log and store the results in an array

for f in $file

do

...

done

...

# Use a for loop to iterate and calculate the sum of the IPs

# Note, the first position in the array also contains the last array value

# This throws off the sum and average so we have to skip 0 and start at 1

for (( j=1; j<$len; j++ ))

do

# Calculate the sum of the IPs in the array

sum=$(echo "$(($sum + ${numIP[$j]}))")

# Determine the lowest number per day

(( ${numIP[$j]} < low )) && low=${numIP[$j]}

# Determine the highest number per day

(( ${numIP[$j]} > high )) && high=${numIP[$j]}

done

# Compute the average by dividing the sum by the length of the array minus 1

# Use "scale" to round down to the nearest whole number as we can't have a "fraction"

# of a visit

avg=$(echo "scale=0; $sum / ($len -1)" | bc -l )

done

Breakdown:

- for (( j=1; j<$len; j++ ))

- Start “j” at 1, keep looping while “j” is less than $len (the length of the numIP array), and increment “j” by one on each pass through the loop

- sum=$(echo "$(($sum + ${numIP[$j]}))")

- Sum up all the entries in the numIP array to get the total number of unique visits

- (( ${numIP[$j]} < low )) && low=${numIP[$j]}

- Compare the current value of the array element to the value of “low”. If it’s less than the current value, update the value of “low” with the current array element

- (( ${numIP[$j]} > high )) && high=${numIP[$j]}

- Compare the current value of the array element to the value of “high”. If it’s greater than the current value, update the value of “high” with the current array element

- avg=$(echo "scale=0; $sum / ($len -1)" | bc -l )

- Calculate the average by dividing the total sum of the array elements by the length of the array minus 1 (to account for skipping position zero).

- scale=0 truncates the result to a whole number, as you can’t have a fraction of an IP address

- | bc -l Pipes to bc, an arbitrary precision calculator, and loads the standard math library
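The arithmetic is easy to check by hand with a tiny made-up array. The sketch below follows the same steps, but swaps bc for bash’s built-in integer arithmetic, which also truncates toward a whole number. That swap is only for the example and is not what the script above does.

```bash
#!/bin/bash
# Made-up counts; position zero repeats the last value, as in the script
numIP=(241 254 118 241)
len=${#numIP[@]}

sum=0
low=${numIP[1]}
high=${numIP[1]}

# Skip position zero and walk the real daily counts
for (( j=1; j<len; j++ ))
do
    sum=$(( sum + numIP[j] ))
    (( numIP[j] < low )) && low=${numIP[j]}
    (( numIP[j] > high )) && high=${numIP[j]}
done

# Integer division in bash truncates, like "scale=0" in bc
avg=$(( sum / (len - 1) ))

echo "days=$((len - 1)) sum=$sum low=$low high=$high avg=$avg"
```

Here the three real counts add up to 613, and 613 divided by 3 is 204.33, which is truncated to 204.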

5. Output the final statistics

for (( i=0; i<$numDomains; i++ ))

do

...

# Use a for loop to count the unique IPs for each daily log and store the results in an array

for f in $file

do

...

done

...

# Output the stats for the domain

echo "Domain: ${ARRAY_DOMAINS[$i]} "

echo "Total number of days: $(($len -1))"

echo "Total unique visits: $sum"

echo "Lowest daily unique visits: $low"

echo "Highest daily unique visits: $high"

echo "Average daily unique visits: $avg"

echo ""

done

Since we’re still in the outer “for” loop, we can echo out all the statistics that we calculated. No matter how many domains you add to the ARRAY_DOMAINS array, this method will expand and produce the statistics for each (provided the log files exist of course).

6. Full and final code
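Below is a sketch of the complete script, assembled from the snippets in sections 1 through 5. Treat it as a reconstruction from those pieces rather than a verified copy of the file on my server. It assumes the directory layout described earlier and that zgrep and bc are installed.

```bash
#!/bin/bash

DIR_LOGS="/var/log/nginx"
LOG_TYPE="access"
ARRAY_DOMAINS=("techbit.ca" "totalclaireity.com")
GREP_EXCLUDE="bot|php|login|solr|_ignition|wp-content|\.env|\.well-known|autodiscover|ecp|cgi|api|actuator|hudson|reportserver|getuser|\/console|wlc6|ftpsync\.settings|ab2g|ab2h|aspx|wp-admin|aaa9|\.git\/config|fgt_lang|server-status|\.json"
numDomains=${#ARRAY_DOMAINS[@]}

# Use a for loop to iterate through the domains in the array
for (( i=0; i<$numDomains; i++ ))
do
    # Zero out temp variables at start of loop
    len=0
    sum=0
    low=0
    high=0
    avg=0
    numIP=()

    # Build the file path
    file="$DIR_LOGS/${ARRAY_DOMAINS[$i]}/$LOG_TYPE/$LOG_TYPE.${ARRAY_DOMAINS[$i]}*.gz"

    echo "Calculating stats for ${ARRAY_DOMAINS[$i]}..."
    echo ""

    # Count the unique IPs for each daily log and store the results in an array
    # (position zero ends up holding a copy of the last count, see section 3)
    for f in $file
    do
        numIP=$(zgrep -i -v -E "$GREP_EXCLUDE" $f | grep "GET" | cut -d ' ' -f 1 | awk '!a[$0]++' | wc -l)
        numIP+=($numIP)
    done

    # Length of the array, and starting low/high from the second position
    len=${#numIP[@]}
    low=${numIP[1]}
    high=${numIP[1]}

    # Skip position zero and start at 1
    for (( j=1; j<$len; j++ ))
    do
        sum=$(echo "$(($sum + ${numIP[$j]}))")
        (( ${numIP[$j]} < low )) && low=${numIP[$j]}
        (( ${numIP[$j]} > high )) && high=${numIP[$j]}
    done

    # Average, truncated to a whole number
    avg=$(echo "scale=0; $sum / ($len -1)" | bc -l )

    # Output the stats for the domain
    echo "Domain: ${ARRAY_DOMAINS[$i]} "
    echo "Total number of days: $(($len -1))"
    echo "Total unique visits: $sum"
    echo "Lowest daily unique visits: $low"
    echo "Highest daily unique visits: $high"
    echo "Average daily unique visits: $avg"
    echo ""
done
```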


7. Output

After we run the script, we get the following output:

Calculating stats for techbit.ca...

Domain: techbit.ca

Total number of days: 32

Total unique visits: 7733

Lowest daily unique visits: 118

Highest daily unique visits: 311

Average daily unique visits: 241

Calculating stats for totalclaireity.com...

Domain: totalclaireity.com

Total number of days: 32

Total unique visits: 372

Lowest daily unique visits: 7

Highest daily unique visits: 21

Average daily unique visits: 11

Conclusion

Realistically I could have made the script even more robust by adding in some additional checks (like checking to see if the log files exist before trying to grep them) as well as error handling, but here we are.
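As one possible starting point for such a check, here is a minimal sketch. The logs_exist function is a hypothetical helper, not part of the script above, and it assumes the path contains no spaces because the pattern is expanded unquoted.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if the pattern matches at least one file
logs_exist() {
    local pattern=$1 f
    for f in $pattern
    do
        # With no match the pattern stays literal, so -e fails
        [ -e "$f" ] && return 0
    done
    return 1
}

# Example use with a made-up path that should not exist
if logs_exist "/nonexistent/example.com/access/*.gz"
then
    status="found"
else
    status="missing"
fi
echo "Logs are $status"
```

In the script, a check like this could sit just before the inner loop so that a domain without any compressed logs is skipped instead of being passed to zgrep.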

If you would like to take this and implement a similar script for your own web server, I leave the above as an exercise for you.

Happy scripting!!

If you have any questions/comments please leave them below.

Thanks so much for reading ^‿^

Claire

If this tutorial helped you out please consider buying me a pizza slice!